Millions of people experience photosensitivity, where flashing lights and patterns can trigger adverse health reactions like seizures. On social media, flashing GIFs and autoplaying videos create significant accessibility challenges. Our research paper, “Not Only Annoying but Dangerous”, showcases user-centered design solutions for a more inclusive online experience. As a key member of the research team, I facilitated co-design workshops, documented observations, conducted thematic analysis of user needs, performed the literature review, and contributed to the paper’s writing. Collaborating with photosensitive individuals and UX designers, we developed practical interventions to create safer social media experiences.


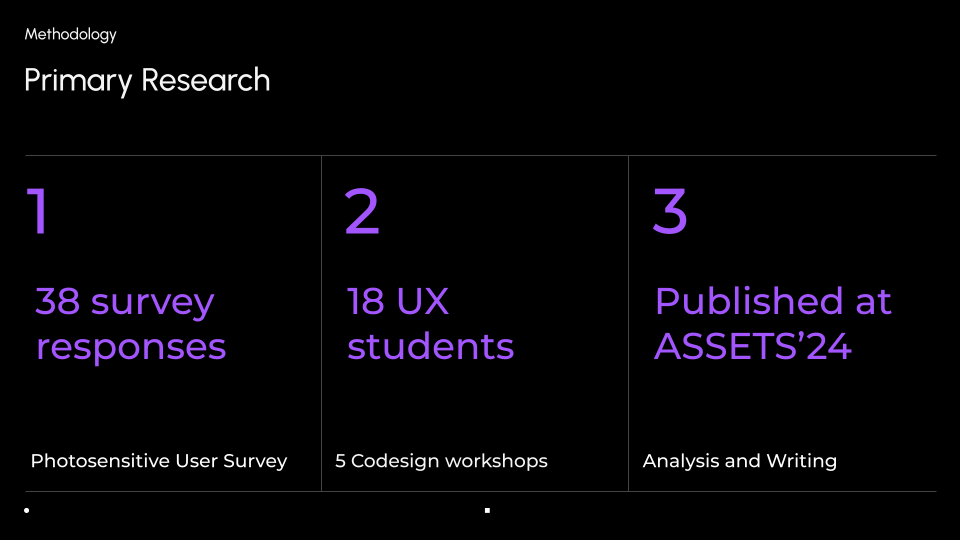

To comprehensively understand the challenges faced by photosensitive users and design effective protections, this research employed a mixed-methods approach.


This section presents the key findings from our user survey and co-design workshops, highlighting the needs of photosensitive users and opportunities for design intervention.

Our survey, distributed via social media and disability advocacy networks, explored the experiences of 38 photosensitive respondents. Although the sample is small, it reflects the difficulty of reaching this group, many of whom avoid social media platforms because of legitimate safety concerns.


Key findings reveal significant challenges and coping strategies:

Some friends add content warnings for flashing graphics (P40).


Our co-design workshops with UX design students, some of whom self-identified as photosensitive, provided valuable qualitative insights. These firsthand perspectives enriched the discussions and ensured the proposed solutions were grounded in lived experience.

Key themes and ideas emerging from the workshops include:

Based on our research findings, we propose a multi-layered ecology of protections to address the complex challenges faced by photosensitive users. This approach addresses the issue at multiple levels, from policy and system design to user empowerment and community action. While we identified 21 features in the workshop activities, for the sake of brevity we will focus on some of the most frequently noted features. The UI design is based on low-fidelity prototypes and discussions from workshops, along with thematic analysis of primary research.

The survey revealed that current reporting mechanisms are inadequate, with no specific category for dangerous flashing content. Existing options are often irrelevant, ineffective, and fail to differentiate between malicious intent and accidental sharing, highlighting the need for a new approach to reporting photosensitivity triggers. Improved reporting could provide platforms with valuable data about the prevalence and types of triggering content.

This solution enhances the reporting mechanism by adding distinct options for strobes and flashing lights to better capture specific photosensitivity triggers. Users can also mark content as malicious or unintentional and provide additional context. After reporting, users receive a confirmation, options to block or unfollow the poster, and instructions for immediate relief, such as looking away from the screen.
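As a rough illustration of how such a report might be represented, the sketch below models the trigger category, the intent flag, and the follow-up steps shown after submission. All names here (`Trigger`, `Intent`, `PhotosensitivityReport`, `post_report_steps`) are hypothetical and are not taken from the paper or from any platform's API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Trigger(Enum):
    """Distinct report categories for photosensitivity triggers."""
    STROBES = "strobes"
    FLASHING_LIGHTS = "flashing_lights"


class Intent(Enum):
    """Lets the reporter separate targeted attacks from accidental sharing."""
    MALICIOUS = "malicious"
    UNINTENTIONAL = "unintentional"


@dataclass(frozen=True)
class PhotosensitivityReport:
    post_id: str          # hypothetical identifier of the reported content
    trigger: Trigger
    intent: Intent
    context: str = ""     # optional free-text context from the reporter


def post_report_steps(report: PhotosensitivityReport) -> List[str]:
    """Messages shown after submission: confirmation, block/unfollow, relief."""
    return [
        f"Your report on {report.post_id} was received.",
        "You can block or unfollow the account that posted this.",
        "If you feel unwell, look away from the screen.",
    ]


report = PhotosensitivityReport(
    post_id="post-123",
    trigger=Trigger.STROBES,
    intent=Intent.UNINTENTIONAL,
    context="Flashing GIF shared in a group chat",
)
for step in post_report_steps(report):
    print(step)
```

Keeping the trigger and the intent as separate fields is one way to let moderators filter on either dimension, which matches the distinction the survey respondents asked for.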

Enforcing and verifying photosensitivity reports can be challenging, and handling a large volume of reports may require significant resources. However, existing content moderation systems for issues like nudity and violence show that large-scale reporting is already being managed, though potentially inefficiently. This suggests that similar infrastructure could be adapted and improved to address photosensitive content.

Participants wanted system-wide autoplay controls that override app settings, but ad-driven platforms may resist this. They also proposed a full-screen graphic filter that prevents triggering content from reaching the user. Existing tools for flashing graphics tend to be browser-based, which means they do not cover social media, which tends to be app-based.

Real-time System-Level Graphic Filter: A filter that operates at the system level (meaning it works regardless of the specific app being used) and directly alters the pixels being displayed on the screen. The filter can use a frame-by-frame detection algorithm (like South et al.'s) to identify potentially dangerous changes in luminance (brightness) and color (red-shift) between frames.
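To make the frame-by-frame idea concrete, here is a minimal sketch of luminance-based flash counting. It is not South et al.'s algorithm. It is a simplified illustration that loosely follows the WCAG general flash thresholds (a luminance change of at least 10% where the darker frame is below 0.80, and no more than three flashes per second), averages luminance over the whole frame, and ignores both the flash-area test and red-shift detection. The function names and the frame representation (a list of 8-bit sRGB tuples) are assumptions made for this example.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # 8-bit sRGB (r, g, b)


def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(pixel: Pixel) -> float:
    """Relative luminance in [0, 1] using the standard sRGB weights."""
    r, g, b = (_linearize(v) for v in pixel)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def mean_luminance(frame: Sequence[Pixel]) -> float:
    """Average luminance of a non-empty frame (a simplification)."""
    return sum(relative_luminance(p) for p in frame) / len(frame)


def count_flashes(luminances: Sequence[float],
                  min_delta: float = 0.10,
                  dark_ceiling: float = 0.80) -> int:
    """Count flashes, where one flash is a pair of opposing luminance changes.

    Only single-step changes are considered; slow ramps are ignored.
    """
    transitions = 0
    last_direction = 0
    for prev, curr in zip(luminances, luminances[1:]):
        delta = curr - prev
        if abs(delta) < min_delta or min(prev, curr) >= dark_ceiling:
            continue
        direction = 1 if delta > 0 else -1
        if direction != last_direction:
            transitions += 1
            last_direction = direction
    return transitions // 2


def is_risky(frames: Sequence[Sequence[Pixel]], fps: int,
             max_flashes_per_second: int = 3) -> bool:
    """Return True if any one-second window exceeds the flash limit."""
    lum: List[float] = [mean_luminance(f) for f in frames]
    window = int(fps)
    for start in range(max(1, len(lum) - window + 1)):
        if count_flashes(lum[start:start + window + 1]) > max_flashes_per_second:
            return True
    return False


black = [(0, 0, 0)] * 4
white = [(255, 255, 255)] * 4
print(is_risky([black, white] * 15, fps=30))  # strobing clip
print(is_risky([black] * 30, fps=30))         # steady clip
```

A real system-level filter would also need to run fast enough to process every frame before display and then dim or blur the offending region. That part is outside the scope of this sketch.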

The workshops repeatedly touched upon the need for more granular content controls and user autonomy. Participants also discussed customizable in-app filters that can be toggled easily, with options to blur, dim, or pixelate content. Triggering content could be identified by existing, though sometimes inconsistent, community tags, supplemented by user reports. Furthermore, providing sufficient context about photosensitivity is essential, because users may not recognize their own symptoms and often associate photosensitivity exclusively with epilepsy.


To prevent accidental sharing of harmful GIFs, especially within close-knit online communities, we propose adding extra steps and poster-end warnings to the sending process. These warnings encourage users to be more mindful of the content they share and to consider its potential impact on others. This addresses the workshop discussions about creator responsibility and the need for greater awareness of photosensitivity. It also acknowledges the reliance on community support highlighted in the survey findings, because it empowers users to protect their friends and family.

The example below demonstrates a photosensitivity warning modal that appears when a user attempts to send a flashing GIF in a direct message. The sender retains the option to send the GIF after being warned. A participant noted that their solution draws inspiration from Slack's “Notify Anyway” prompt.

The movie Spider-Man: Into the Spider-Verse and its trailers, known for their fast cuts and white strobes, exemplify the potential for photosensitivity triggers in media. This is particularly relevant because such content often proliferates across various platforms, including social media sites like TikTok, Instagram Reels, and YouTube Shorts, which often encourage endless scrolling and passive consumption.

To provide users with greater control over potentially triggering video content, we propose a warning overlay. As shown in the accompanying image, before a flashing video begins playing, a dark semi-transparent modal appears on the video player interface. This modal includes:


This solution addresses the need for granular content control, crucial given varying photosensitivities. Different warning levels empower users to customize their experience and make informed choices. Leveraging community feedback, particularly via report counts, supplements automated detection and builds upon existing reliance on community support.
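One way the combination of automated detection and community report counts could map to warning levels is sketched below. The level names, the thresholds (`caution_reports`, `severe_reports`), and the per-user preference are all hypothetical values chosen for illustration. The research does not specify them.

```python
from enum import IntEnum


class WarningLevel(IntEnum):
    NONE = 0
    CAUTION = 1
    SEVERE = 2


def warning_level(detector_flagged: bool, report_count: int,
                  caution_reports: int = 3,
                  severe_reports: int = 20) -> WarningLevel:
    """Combine an automated flag with community reports (assumed thresholds)."""
    if report_count >= severe_reports or (
            detector_flagged and report_count >= caution_reports):
        return WarningLevel.SEVERE
    if detector_flagged or report_count >= caution_reports:
        return WarningLevel.CAUTION
    return WarningLevel.NONE


def should_show_overlay(level: WarningLevel,
                        user_threshold: WarningLevel) -> bool:
    """Show the overlay when the content meets the user's chosen sensitivity."""
    return level != WarningLevel.NONE and level >= user_threshold


level = warning_level(detector_flagged=True, report_count=5)
print(level.name, should_show_overlay(level, WarningLevel.CAUTION))
```

Letting each user choose their own threshold reflects the point that photosensitivity varies between people, so a single platform-wide cutoff would suit few of them.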

Our research reveals a concerning dissonance. Social media, intended for connection, can inadvertently isolate photosensitive users. This is due to inadequate platform features and the prevalence of harmful flashing content, particularly in short-form videos. This leaves users feeling worried and vulnerable.

To address this, we propose a range of solutions visualized across diverse digital contexts—social media feeds, direct messages, GIF databases, and short-form content platforms. I created these visualizations to demonstrate the various ways users encounter flashing content and illustrate how our proposed designs offer feasible improvements.

While further evaluation with photosensitive users is crucial, this research is grounded in the lived experiences of both photosensitive and non-photosensitive users, gathered through co-design workshops that fostered a community-driven approach. We believe that implementing the proposed solutions and prioritizing user well-being can lead to a more accessible online environment.

The full research paper titled “Not Only Annoying but Dangerous”: devising an ecology of protections for photosensitive social media users can be read here.